Missing information from last week's meeting? Ask Tucan!

Learn here how you can use Tucan.ai to automate your meetings and create a smart knowledge archive for conversational content:

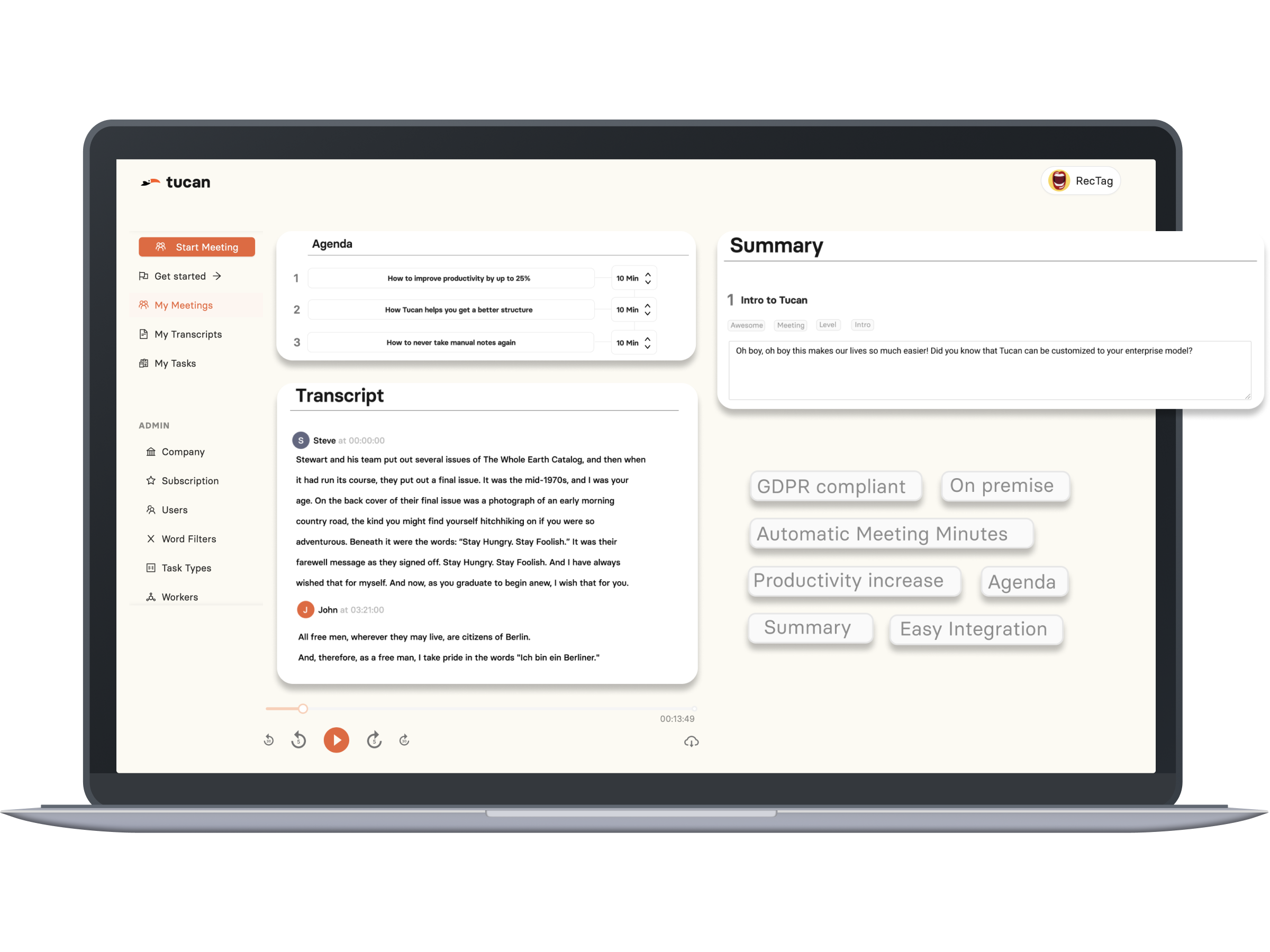

Step 1: Connect your conferencing apps and other tools

Tucan.ai integrates with popular calendar and video conferencing apps such as Google Calendar, Zoom, Microsoft Teams, Google Meet and more. You can easily connect your accounts and invite our bot to join your meetings as a participant. Tucan.ai will automatically record the audio of your meetings and upload it to its secure cloud platform. Alternatively, you can upload any audio or video file to your account yourself. Tucan.ai will take care of the rest.

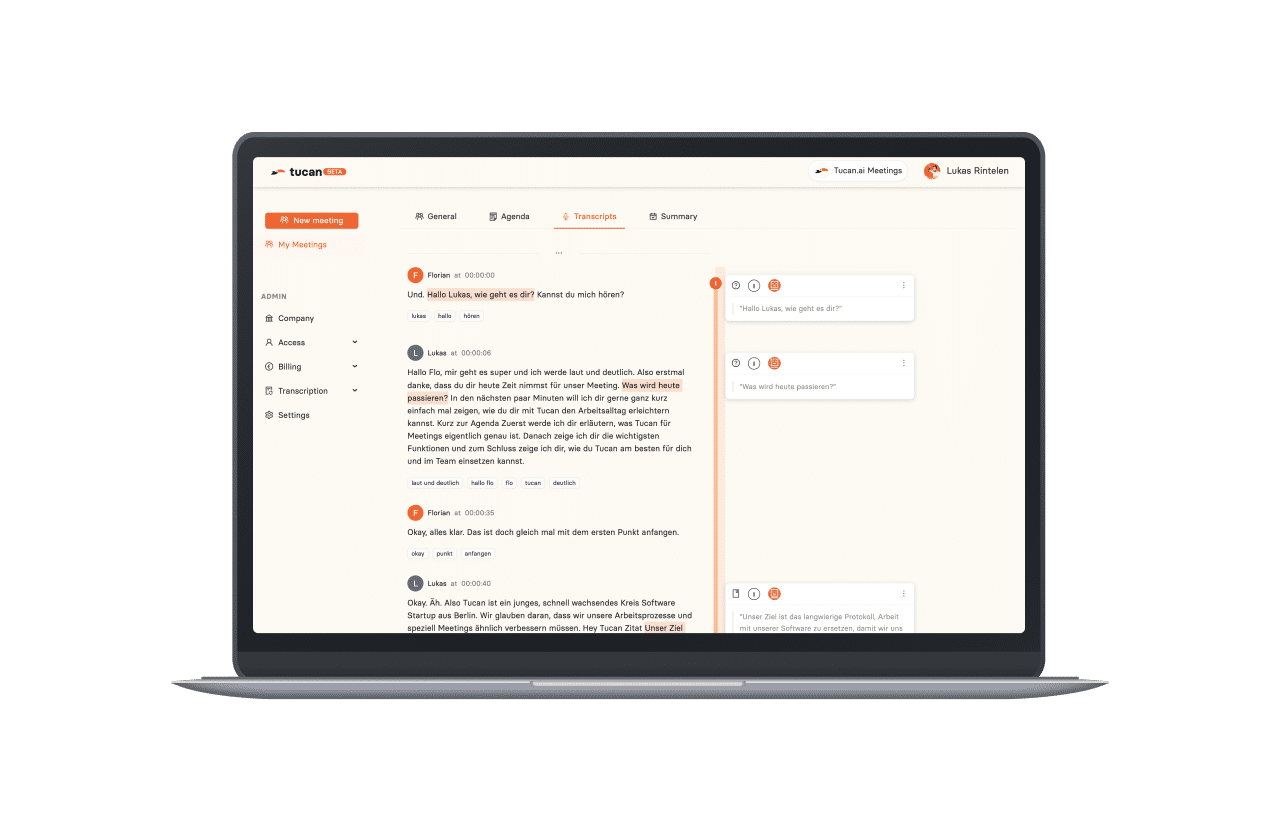

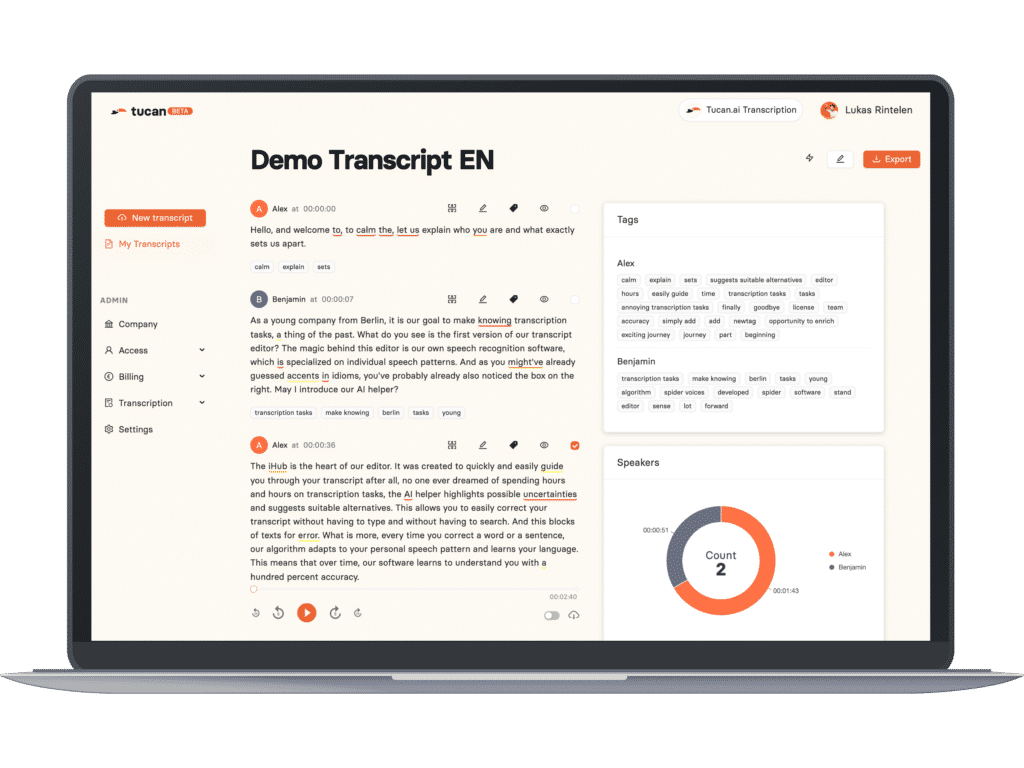

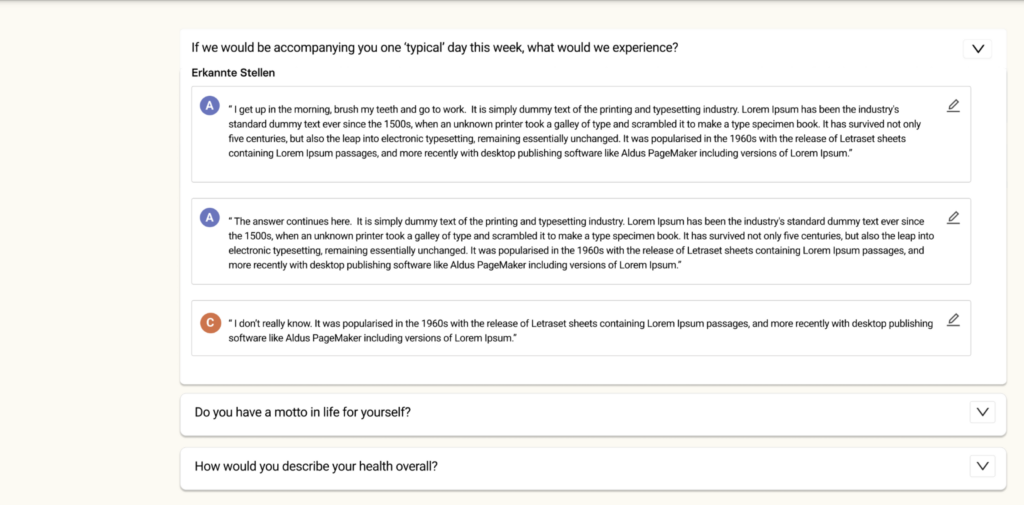

Step 2: Review, edit and share the content provided by Tucan.ai

Right after your meeting Tucan.ai generates a transcript and a summary using its own speech recognition algorithms. You can easily edit, annotate, highlight and share content with your team members, followers or clients. Furthermore, it is now even possible to ask questions about past meetings and get answers based on the gradually improving speech recognition and natural language understanding capabilities of our AI.

Step 3: Keep track of and manage your conversations with ease

Tucan.ai also provides you with data and insights from your meetings, such as talking times, sentiments, action items, keywords and topics. These metrics may be used to improve your communication skills, track your progress, identify gaps or opportunities and optimise your task management. You can also integrate Tucan.ai with other tools like CRM systems, project management and collaboration platforms to automate your entire workflow.

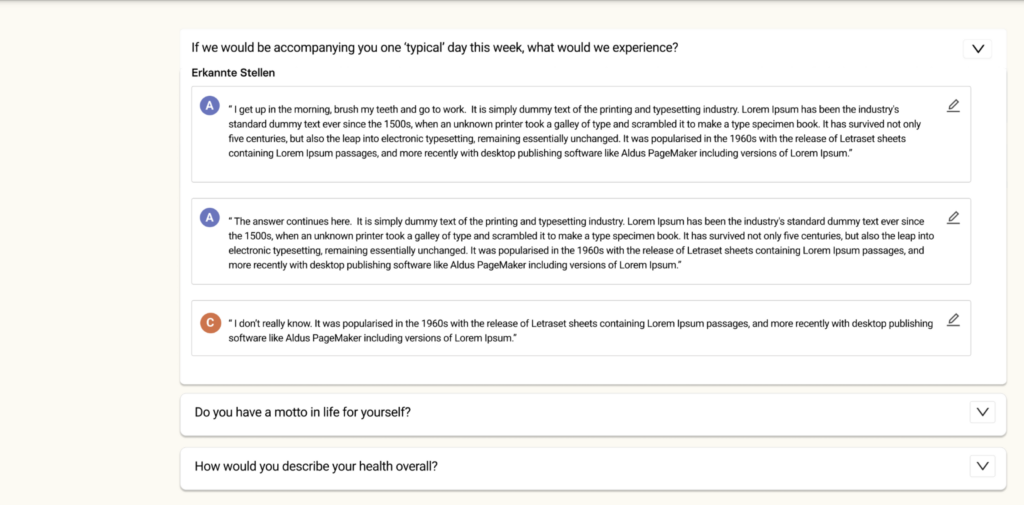

Step 4: Gain deeper qualitative insights through automated encoding

Tucan.ai also offers a smart feature which allows you to get your interviews and focus groups encoded automatically on predefined categories or themes. This function is particularly well-suited for market research, opinion polling and similar fields that rely on qualitative data analysis. You can use Tucan.ai to extract relevant insights more quickly and accurately from your conversations without spending hours on manual encoding. Learn more in our factsheet "AI-powered encoding with Tucan.ai":

Step 5: Use our new prompt feature for swift inquiries and analyses

We are constantly developing new functionalities to enhance Tucan.ai’s capabilities. One of our most exciting new releases allows you to prompt various kinds of data extractions and summaries from your conversations. For example, you can ask Tucan.ai, almost like a company-internal GPT, to provide the contents needed for a SWOT analysis from strategy meetings or customer personas from sales calls, and it will return customised outputs based on your prompts.

Outpace your competition - Book a free consultation call with our CEO Florian!

In case you wish to learn more about Tucan.ai's solutions for teams and enterprises, please schedule a short online call with our founder and CEO, Florian Polak.

CUSTOMER VOICES

What they say about us

"We at Axel Springer have been using Tucan.ai for already over two years now, and we continue to be very satisfied with the performance of the software and the development process as a whole."

Lars

Axel Springer SE

"I have known the founding team for over a year. At Porsche, we are very satisfied with their work so far. I have recommended the use of Tucan.ai to my colleagues and business partners and I have been getting highly positive feedback back across the board - both on the service and the software."

Oliver

Porsche AG

"Tucan.ai has been a game-changer for our team. The software is incredibly intuitive and easy to use. It has saved us countless hours of work and has allowed us to focus on what really matters - our clients. I would highly recommend Tucan.ai to anyone looking for an AI-powered productivity tool."

Alex

Docu Tools

The new AI frontier: Short introduction into the most common language models

Language models can perform a variety of tasks, including writing essays, summarising articles, answering questions, creating captions and even composing music. But not all of them are created equal. There are different kinds of language models, each with its own strengths and weaknesses. In this blogpost, we will explore the three major types which have emerged as dominant, and we will discuss why and how they can be extremely beneficial to us.

Large language models

Large language models are the behemoths of the language modeling world. They are trained on massive amounts of text data, sometimes billions of words, from various sources such as books, websites, social media, and news articles. They use deep neural networks with hundreds of layers and billions or trillions of parameters to learn the statistical patterns of natural language.

Some examples of large language models are GPT-3 and -4, BERT, and T5. These models can generate coherent and diverse texts on almost any topic, given a suitable prompt. They are also able to perform multiple tasks without any further training, by using few-shot prompting techniques. GPT can write movie reviews, solve math problems, take college-level exams, and even identify the intended meaning of a word.
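Few-shot prompting can be illustrated without calling any model: the prompt simply contains a handful of worked examples followed by the new input. The following is a minimal sketch; the function name `build_few_shot_prompt`, the "Review:/Sentiment:" layout and the example reviews are assumptions made for illustration, not a format prescribed by any particular model.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble a few-shot prompt: instruction, worked examples, then the new input."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Review: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # The final block leaves the label empty for the model to complete.
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)


# Hypothetical worked examples that show the model the expected pattern.
EXAMPLES = [
    ("A moving story with superb acting.", "positive"),
    ("Two hours I will never get back.", "negative"),
]

prompt = build_few_shot_prompt(
    "Classify the sentiment of each movie review as positive or negative.",
    EXAMPLES,
    "The plot dragged, but the soundtrack was wonderful.",
)
print(prompt)
```

The resulting string would be sent to a language model as-is; no further training takes place, which is what distinguishes this approach from fine-tuning.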

Large language models are impressive, but they also have their limitations. Firstly, they require plenty of computational resources to train and run, which makes them expensive and inaccessible to many users. Secondly, large language models consume a lot of energy, which raises environmental concerns. Lastly, they may inherit biases and errors from their training data, which can easily lead to harmful or misleading outputs.

Fine-tuned language models

Fine-tuned language models are the specialists of the language modelling world. They are trained on a specific task or domain, using a smaller amount of data that is relevant to the task or domain. They use pre-trained large language models as a starting point and then fine-tune them on the task or domain data.

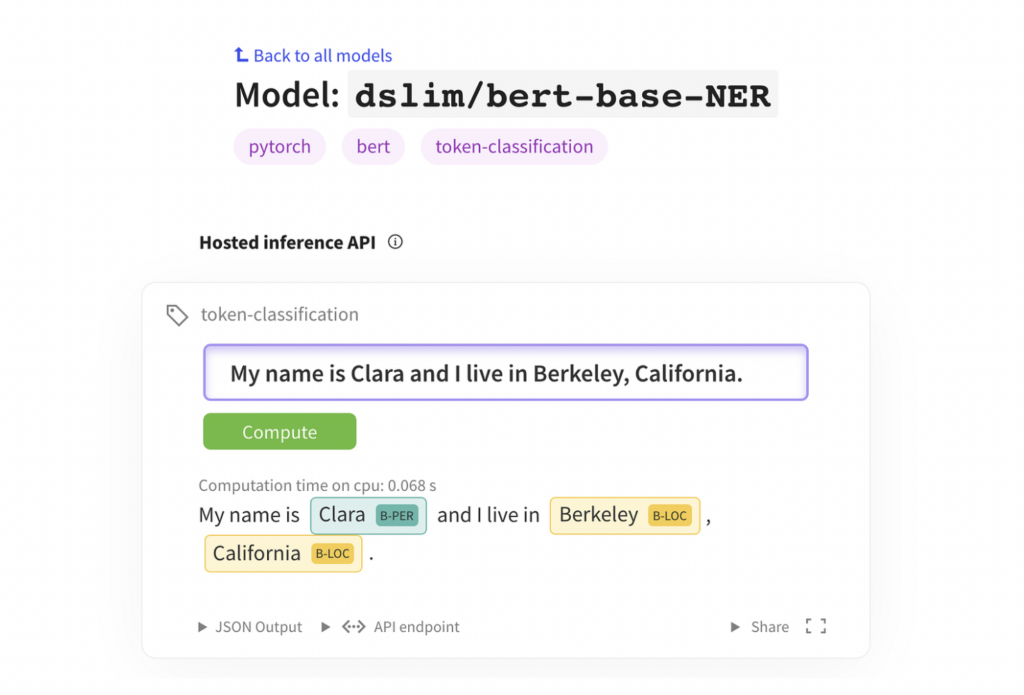

Some examples of fine-tuned language models are OpenAI Codex, GPT-3 Ada, and BERT-base-NER. These models can perform tasks that require more domain knowledge or accuracy than large language models. For example, OpenAI Codex can generate computer code from natural language descriptions, GPT-3 Ada can write high-quality medical texts, and BERT-base-NER can recognise named entities in text.

Fine-tuned language models are powerful, but they also have some drawbacks. They require more data and expertise to train than large language models. They may also overfit to the task or domain data and lose some of the generalisation ability of large language models. Furthermore, they may still suffer from some of the biases and errors of large language models.

Edge language models

Edge language models are the lightweights of the language modeling world. They are designed to run on devices with limited computational resources like smartphones, tablets or smartwatches. Edge language models use smaller neural networks with fewer layers and parameters to generate natural language text.

The most prominent examples are TinyBERT, MobileBERT and DistilGPT-2. These models can perform tasks that require fast and local processing of natural text. For example, TinyBERT is able to answer questions on mobile devices, while MobileBERT can create captions for images on tablets and DistilGPT-2 has the skill of writing short texts on smartwatches.

Edge language models are definitely convenient, but they are also challenging, as optimising their size and speed without compromising their quality requires more engineering effort. Furthermore, they lag behind the state-of-the-art performance of large or fine-tuned language models on some other tasks.

Why language models matter

Language models matter because they enable us to communicate with computers in natural ways. They also empower us to access information more easily and efficiently. And they inspire us to create new forms of art and expression.

Imagine you are planning a trip to Paris and you want to find the best hotel, the cheapest flight, and the most interesting attractions. You could spend hours browsing through different websites, comparing prices, reading reviews, and making reservations. Or you ask a language model to do all that for you in minutes.

Language models are not only useful for travellers, but for anyone who needs to communicate with others or access information. They can help us research stories and write stories, but also learn new languages.

However, they do also pose some risks that we need to address. They may easily generate false or harmful information that can misinform or manipulate us, or they may amplify existing social inequalities or create new ones by excluding or discriminating against certain groups of people.

We need to be well-aware of the capabilities and limitations of different types of language models in order to use them responsibly and ethically. And we need to engage in continuous constructive collaboration and dialogue with researchers, developers, policymakers as well as users of language models.

Would you like to test Tucan.ai for your company?

Book a free consultation call!

Seven Smart Software Tools Every Legal Team Should Use

To demonstrate your value to an organization, consider using smart tools: any machines, devices, or software that aid in completing electronic work. These tools help with efficiency, accuracy, cost savings, and collaboration. In other words, they make your team’s life much easier. In this post, we’ll cover seven of the best smart tools every legal team should use.

Practice Management Software

Legal professionals handle many operations on a day-to-day basis. These activities include time tracking, case management, and billing, just to mention a few.

However, because some of these activities are tedious and time-consuming, you should consider using practice management software. It helps ensure that your business runs smoothly and that critical information is stored safely.

Such software is designed to streamline and automate non-core tasks, including administrative tasks. Not only that, but these tools will allow you and other members of your legal team to focus on the core aspects of your legal firm.

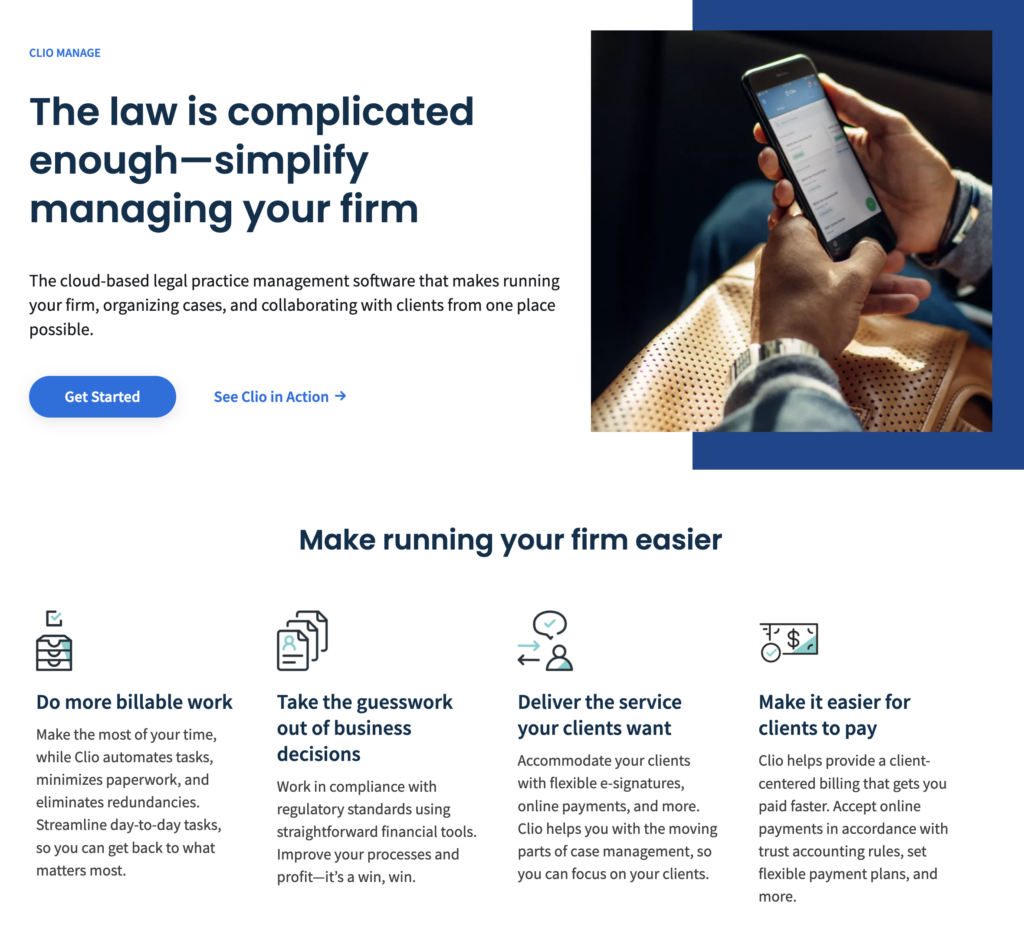

For instance, typical practice management software will help you with case management, time tracking, billing, and invoicing. Clio, Smokeball, and CASEpeer are some of the best practice management software that the market has to offer.

E-Discovery Tools

E-discovery tools are software applications and related tools that help legal professionals collect, process, review, and produce electronic data and other legal documents. They help prepare such documents for legal proceedings, audits, and investigations.

Most discovery is now conducted electronically. E-discovery tools save time on their own, and they can be combined with other tech tools for lawyers to streamline your discovery process further.

Document Management and Automation Software

As their names suggest, these tools help legal teams automate, organize, store, and manage documents related to their cases, clients, and other legal proceedings. They can perform a wide range of tasks, including automating the document creation process, reducing manual data entry, and ensuring the safety of stored documents. Having access to reputable document management and automation software can help streamline workflow, increase productivity and reduce errors. Other common features of these tools include document indexing, version control, collaboration features, and advanced search capabilities.

Tucan.ai is one of the best automation tools when it comes to transcribing and summarising conversations with a lot of legal jargon. It helps you document and analyse your calls, meetings and hearings, and it takes over repetitive tasks such as data entry and text coding, allowing you to shift your focus to what is actually important at work.

Data Security and Privacy Tools

It is standard for legal teams to deal with sensitive and confidential information daily. Such information includes confidential client info, financial information, and other sensitive legal documents.

Legal teams are responsible for safeguarding this information and ensuring it doesn't end up in the hands of unauthorized personnel. The most common and widely used data security and privacy tools include data encryption, backup, recovery, password managers and access control tools.

Antivirus software is another security tool you should consider adding to your arsenal. The best antivirus software helps legal teams maintain data security and privacy by protecting their systems from malware, viruses, and other cyber threats. These threats not only compromise sensitive data but can also harm your system's performance and functionality.

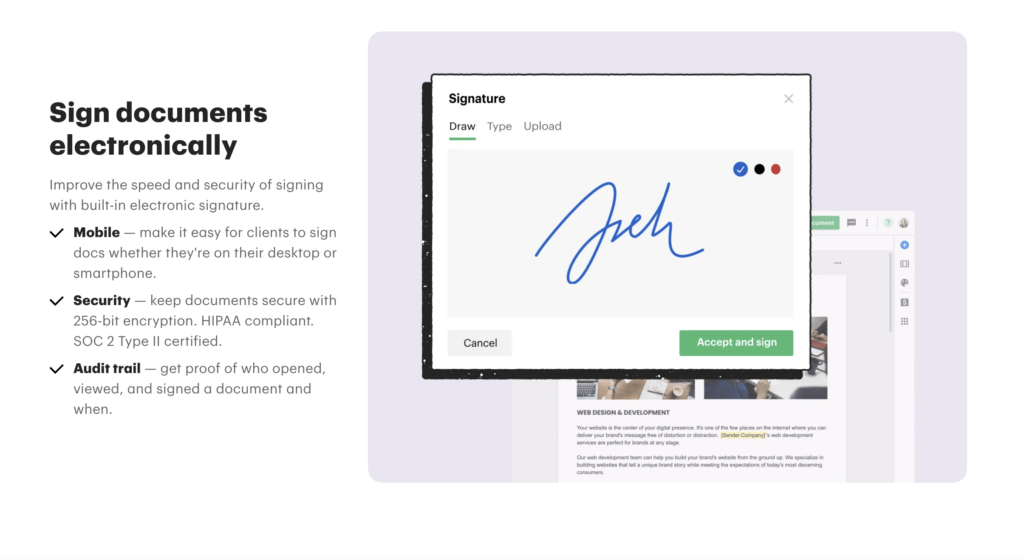

E-Signature Tools

Any legal operation has to involve e-signature software. Such tools are essential for any legal team as they speed up contract conversion while bringing security and transparency to the company’s operations.

E-signature tools are also convenient. They allow you to sign documents from anywhere, at any time, as long as you have an internet connection. This means you don't have to be physically present to sign important documents, which can save you a lot of time and hassle. Some of the best digital signature apps for law firms include DocuSign, Clio, MyCase, PandaDoc, and SignNow.

Contract Management Software

Contract management systems give legal teams the ability to focus on high-value and more strategic work. Contract management software lets you store all your contracts in one centralized location.

PandaDoc, DocuSign, and Concord are some of the best contract management software with features like centralized storage, automated reminders, and performance monitoring. You will also save time and resources, because these tools take over tedious, time-consuming administrative tasks.

Legal Analytic Tools

Legal analytic tools are applications designed to help law firms gather, analyze, and interpret information relating to legal cases and clients. Beyond interpreting information, these tools are becoming increasingly important for law firms looking to streamline their marketing efforts and improve client satisfaction.

Law firms can gain a better understanding of their target audience and tailor their marketing campaigns accordingly. They can also use the information from analytics to track client satisfaction levels, allowing them to identify areas where they can improve their services and enhance the overall client experience.

Conclusion

Today’s fast-paced legal sector has pushed legal teams to work more effectively and efficiently than ever before. Advancements in tech have made it possible for the same teams to automate many of their routine tasks while streamlining their workflow.

Smart tools have emerged as game-changers for legal teams. Every legal team should consider the seven covered here: practice management software, e-discovery tools, document management and automation software, data security and privacy tools, e-signature tools, contract management software, and legal analytic tools.

Improving qualitative research with NLP

According to a survey conducted by Qualtrics last year, over 50 percent of decision-makers in market research are convinced they know what AI is in concrete terms. Just about all of them assume that it will have a very big impact on the development of the industry in the coming years.

Moreover, trend researchers at an SAP subsidiary focused on experience management predict that within five years at the latest, at least 25 percent of all surveys will be conducted with the help of digital assistants.

Since the release and spread of ChatGPT and other useful solutions based on large language models (LLM), it has become clear that NLP is rapidly gaining in performance and importance. However, OpenAI's prodigy has not mastered everything already possible with the help of algorithms.

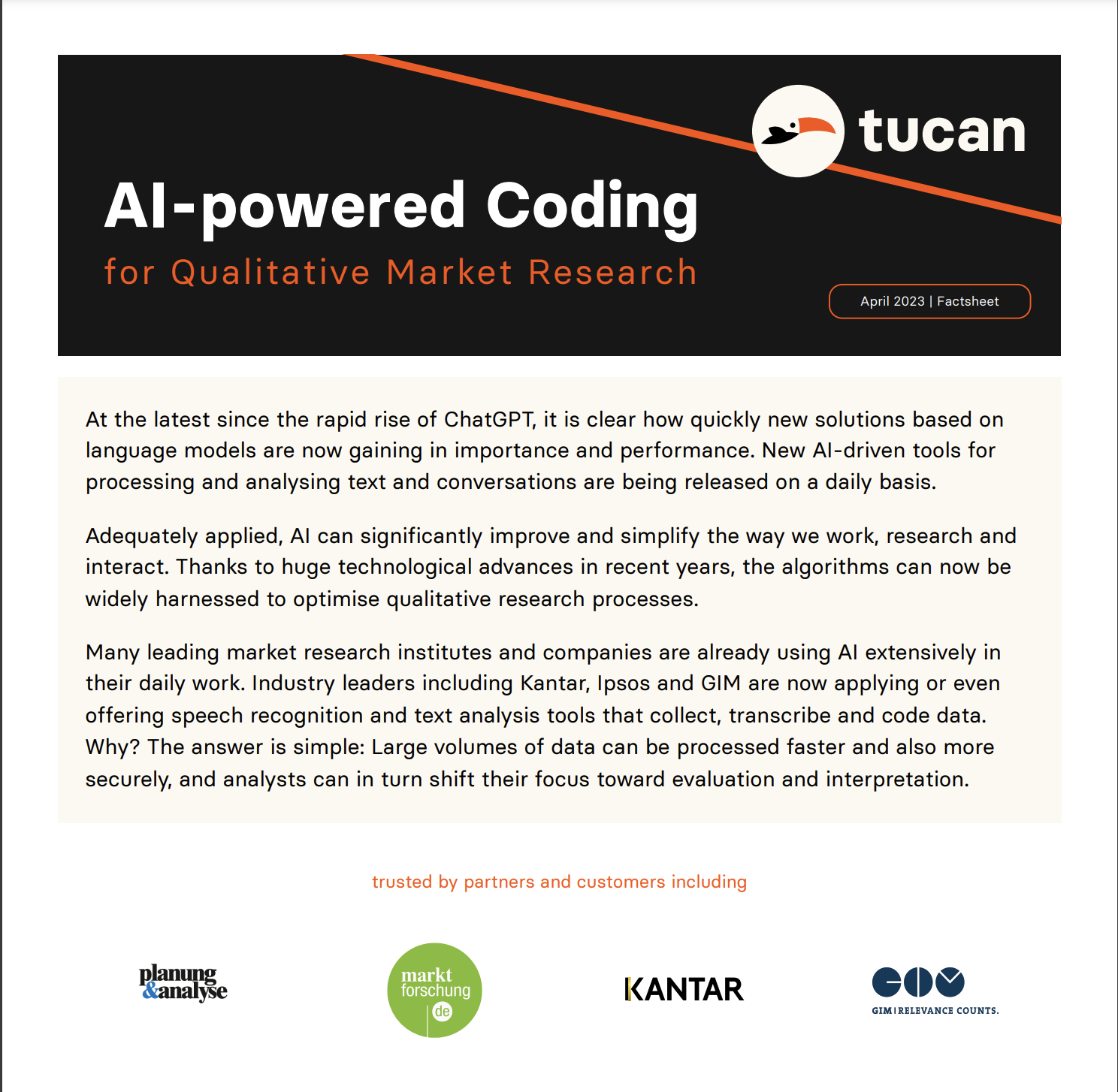

New NLP tools for documenting and analysing conversations are currently presented to the public almost daily. Properly applied, AI can positively impact not only how we communicate with machines, but also the way we humans work, research and interact with each other. Thanks to rapid innovation over the past years and months, NLP-based software can now also be used to optimise qualitative research effectively.

Fundamental methods of NLP

NLP methods and systems for speech recognition and conversational intelligence all have clear strengths and weaknesses, but if they are applied in a targeted, controlled and combined manner, they can deliver a great deal of added value.

The fastest growing area is automatic speech recognition (ASR) which converts spoken words into text. These systems work very precisely and are therefore ideal for tasks such as speaker diarisation, logging and transcription. However, when it comes to recognising multiple speakers in noisy environments as well as accents and dialects, some of these solutions still have their difficulties.

Another innovative NLP element is natural language understanding (NLU), one of the areas in which Tucan.ai is specialising. The technology goes beyond transcribing words and tries to determine the meaning behind them. NLU is well suited for sentiment analysis, encoding, and intention recognition.

Finally, there are conversational AI systems that allow programs to have an informative conversation with a human. Virtual assistants can automatically collect data, generate speech and give personalised answers, but usually still find it difficult to correctly classify subtle forms of speech such as idioms or sarcasm.

Benefits of NLP for researchers

Firstly, if developed and applied adequately, NLP can help reduce analysts' personal biases. Whenever a person perceives something, the content is filtered through subjective experiences and opinions before they can understand it, which naturally introduces a certain kind of bias. A typical countermeasure is to have two analysts working on the same data set, but this usually takes twice as long and costs much more.

NLP tools are now being increasingly applied by researchers to process, encode and extract findings from interviews and focus groups. One main advantage is the ability to quickly and accurately transcribe large amounts of spoken data and collect free-text data that would otherwise be overlooked.

NLP tools can also be used to analyse transcripts and identify key themes, statements and patterns that are difficult to detect through manual analysis. They help us identify specific nuances of speech, such as tone of voice, intonation and nonverbal cues.

In addition, the automated nature of these AI-powered applications allows us to analyse larger data sets, leading to more robust results in less time. Automating manual processes enables researchers to reallocate certain resources, which makes many of these tools very cost-effective.

Use cases in qualitative research

Many leading market research institutes and companies are already making extensive use of NLP. Industry leaders such as GfK, Kantar, Ipsos and GIM apply or offer automatic speech recognition and text analysis tools to transcribe and encode language data to better capture and classify evidence. Continue reading for the most popular and important use cases of NLP in qualitative research.

Data collection and processing

Automated data capture involves reading text and recording, transcribing and summarising conversations. With the help of AI, these tasks can not only be outsourced and accelerated, but also made more accurate, objective and transparent. For example, some tools can produce verbatim (including interjections), corrected (cleaned-up grammar) and abstract transcripts as needed, helping to scale interviews and focus groups more easily.

Text analysis

Text analysis and topic recognition are effective and established methods for gaining reliable insights from larger text datasets. IBM estimates, for instance, that about 80 percent of data worldwide is unstructured and consequently unusable. Text analysis uses linguistic and machine learning techniques to structure the information content of text datasets. It builds on methods similar to those of quantitative text mining; beyond modelling, however, the goal is to uncover specific patterns and trends.

Topic recognition

Topic modelling is an unsupervised technique that uses AI to tag text clusters and group them with topics. It can be thought of as similar to keyword tagging, which is the extraction and tabulation of relevant words from a text, except that it is applied to topics and associated clusters of information. NLP models can also compare the data they have been provided with - like the results of a study - with similar resources. In this way, inconsistencies can be identified and "gaps filled" as the AI "knows the context" of other data pools.

Sentence embedding

Probably the most effective application of NLP in qualitative research to date is AI-assisted sentence embedding. It represents statements as numerical vectors so that passages with similar meaning can be matched to one another, which facilitates the processing of large amounts of data and the automated extraction of the most important statements from qualitative interviews.

Keyword recognition

Keyword extraction, or recognition, is an analysis technique that automatically extracts the most frequently used and important words and phrases from a text. It's highly useful for automatically summarising content and better understanding the main topics discussed.
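The frequency-based idea behind keyword extraction can be sketched in a few lines of Python. This is a simplified illustration, not how any specific product works: the stop-word list, the sample transcript and the function name `extract_keywords` are assumptions made for the example, and production tools typically add weighting schemes and phrase detection.

```python
import re
from collections import Counter

# Tiny illustrative stop-word list; real tools use much larger, language-specific lists.
STOP_WORDS = {
    "the", "a", "an", "and", "or", "but", "of", "to", "in", "is", "it",
    "we", "for", "on", "with", "that", "this", "was", "are", "our", "be",
}


def extract_keywords(text, top_n=3):
    """Return the top_n most frequent non-stop-words as (word, count) pairs."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(w for w in words if w not in STOP_WORDS and len(w) > 2)
    return counts.most_common(top_n)


# Hypothetical interview snippet.
transcript = (
    "The pricing of the product came up again. Customers like the product, "
    "but the pricing is confusing. We should simplify pricing for new customers."
)
print(extract_keywords(transcript))
```

In this sample, "pricing" is the most frequent remaining word, followed by "product" and "customers", which matches what a reader would pick out as the main topics.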

Sentiment analysis

Sentiment analysis aims at identifying the intentions and opinions behind the collected data. While text analysis makes sense of the data set, sentiment analysis reveals the underlying emotions expressed through a statement or in a conversation. It reduces subjective feelings to abstract but processable representations. As with any data analysis, however, some nuances are inevitably lost along the way.
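One simple way to turn a statement into a processable representation is a lexicon-based polarity score. The sketch below is an assumption-laden toy: the word list and its scores are invented for illustration, and modern systems generally rely on trained models rather than hand-written lexicons.

```python
import re

# Hypothetical mini-lexicon; the words and scores are made up for this example.
LEXICON = {
    "great": 1, "helpful": 1, "love": 1, "happy": 1,
    "slow": -1, "confusing": -1, "angry": -1, "bad": -1,
}


def polarity(text):
    """Sum the word scores and map the total to a polarity label."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(LEXICON.get(w, 0) for w in words)
    if score > 0:
        label = "positive"
    elif score < 0:
        label = "negative"
    else:
        label = "neutral"
    return score, label


for statement in [
    "The support team was great and very helpful.",
    "Onboarding felt slow and confusing.",
    "We met on Tuesday.",
    "Not bad at all.",  # negation is ignored, so this is scored as negative
]:
    print(statement, "->", polarity(statement))
```

The last statement shows how nuance can get lost: a plain word count misreads "not bad" as negative, which is one reason real systems model context as well.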

More dynamic and authentic insights

With the help of NLP, not only can additional and better qualitative insights be gained; interviews and analyses can also be made much more context-sensitive, for example because interviewers and moderators follow a script that is continuously adapted by a computer.

NLP offers a multitude of new possibilities for optimising research processes and getting a deeper, more dynamic and authentic understanding of collected data. We will likely soon see many more conversational surveys where follow-up and in-depth questions are asked in real time.

That much is clear: AI is about to revolutionise qualitative research. It is not (yet) able to mimic the deep, explorative abilities of humans. However, we have undoubtedly reached a point at which some programmes can execute certain tasks much faster, cheaper and more reliably than humans.

5 Tucan Tips for More Effective Meetings

1. Plan in a structured way

An effective meeting begins with structured planning. Define an agenda and a goal for the meeting to set a clear focus for discussion. With online meetings becoming ever more common, it is also advisable to specify clearly who should or must take part actively and who may join voluntarily if interested.

2. Communicate requirements

Send participants a short reminder of the meeting's focal points and goals to ensure attendance, preparation and a certain sense of involvement. Where applicable, it is also advisable to tell them in advance which devices and tools will be needed for the meeting.

3. Ensure engagement

Participants bring different personalities into the room. Some prefer just to listen, while others tend to want to dominate the conversation. It is therefore important that the moderator or coordinator makes sure everyone can have their say in the discussion and that all viewpoints are taken into account.

4. Focus on follow-up

The tangible result of a meeting should always be some kind of summary or list of items such as follow-ups and to-dos, along with the names of those who are to take on these tasks. Make sure these people know about the next steps, agree to them and commit to actually carrying them out. Then evaluate the meeting and all its outputs to determine what worked and what did not. Be sure to carry the takeaways over into follow-up meetings.

5. Incorporate feedback

As mentioned above, all participants must commit to the results of a meeting and then take action. Positive effects on the team's overall performance are often also a sign of efficient meetings. Reaching such results requires continuous improvement, whether in the technical execution or in the completion of follow-ups from meetings. The credo should be: always keep the overview.

Meetings are actually a good method for finding ideas and making decisions together, but when poorly planned, moderated and followed up they can be real time-wasters and productivity killers. According to recent studies, 30 to 70 percent of our professional meetings are unnecessary or even counterproductive. Tucan.ai wants to counteract this with the help of new technologies.

Want more tips on new ways of working and productivity?

Why not follow us on LinkedIn for frequent updates?

5 Golden Rules for a Better Meeting Culture

1. Plan structurally.

A productive meeting starts with structural planning. Set an agenda and a goal to define the focal points for discussion. Especially for online meetings, you should also clearly communicate whose attendance is expected and who may join in as a passive participant.

2. Communicate requirements.

Inform participants about the topics and goals of the meeting and include a short reminder to ensure attendance, preparation and a certain sense of involvement. If applicable, it is also advisable to let them know in advance about necessary equipment and tools for the meeting.

3. Ensure commitment.

Participants bring different personalities to the room. Some prefer to just listen, while others tend to dominate a conversation. It is thus important to ensure everyone can speak up and all views are considered.

4. Focus on follow-ups.

The tangible outcome of a meeting should always be some sort of summary or list of take-aways such as follow-ups and to-dos. Make sure people know about, agree to and commit to carrying out follow-up actions. Then evaluate the meeting with all its outputs to determine what worked and what did not. Be sure to include your take-aways in follow-up meetings.

5. Incorporate feedback.

As mentioned above, all participants must be included in the process and commit to their follow-up responsibilities. Positive effects in the overall performance of a team or company are often also indicative of effective meetings. To get there, continuous improvement is required in terms of technical execution as well as successful completion of follow-ups. The credo must be: Stay on top of things.

Meetings are an established method for developing ideas and making decisions together, but when poorly organised they can kill morale and productivity. According to recent studies, 30 to 70 percent of our professional meetings today are unnecessary or even counterproductive.

Tucan.ai is a Germany-based deep-tech startup developing AI software aimed at improving our professional communication. We build productivity tools based on automatic speech recognition, transcription and summarisation to help B2B customers organise, carry out and post-process meetings and other structured conversations more efficiently and productively. Our vision is to outsource exhausting bureaucratic tasks to AI systems so that we can focus more on what is really important and exciting: being innovative and creative together.

More tips on new work approaches and productivity wanted?

Why don't you follow us on LinkedIn for frequent updates!

Top 5 German Podcasts for Market Researchers

If you're fluent in German or are looking to improve your language skills, there are many excellent German-language podcasts out there that cover a range of topics related to market research and opinion mining. Here are the five most important ones that you won't want to miss:

1. Marktforschung aktuell

Hosted by market research expert Dr. Sebastian Kühn, "Marktforschung aktuell" (or "Market Research Up-to-Date") is a weekly podcast that covers a variety of topics related to the field. In each episode, Kühn interviews leading industry experts and discusses the latest trends and developments in market research.

According to Kühn, one of the goals of the podcast is to make market research more accessible to a wider audience. "Many people don't really know what market research is all about, or they have a distorted image of it," he says. "With 'Marktforschung aktuell,' we want to shed light on the various aspects of market research and show how interesting and diverse the field really is."

2. Marktforschung pur!

"Marktforschung pur!" (or "Pure Market Research") is another great podcast for anyone interested in the field. Hosted by market research professionals Anna Schütz and Christin Beyhl, the podcast covers a wide range of topics, from the latest industry trends and techniques to practical tips for market researchers.

In an interview with the hosts, Schütz emphasised the importance of staying up-to-date with the latest developments in the field. "As market researchers, it's essential to constantly learn and evolve to stay relevant and provide valuable insights to our clients," she says. "That's why we started 'Marktforschung pur!' - to share our knowledge and experience with others in the industry."

3. Meinungsforschung im Fokus

"Meinungsforschung im Fokus" (or "Opinion Research in Focus") is a podcast that focuses specifically on the field of opinion mining. Hosted by market research professionals Marcel Büchi and Marc Schneider, the podcast covers a range of topics, including the latest techniques and tools for gathering and analyzing public opinion data.

In an interview with the hosts, Büchi emphasized the importance of understanding public opinion in today's fast-paced, digital world. "With the proliferation of social media and other online platforms, it's more important than ever to track and understand public opinion," he says. "That's what 'Meinungsforschung im Fokus' is all about - helping market researchers and other professionals stay on top of the latest trends and techniques in opinion mining."

4. Marktforschung 2.0

"Marktforschung 2.0" (or "Market Research 2.0") is a podcast that covers the latest trends and developments in the field, with a particular focus on the role of technology and digital tools. Hosted by market research professionals Martin Fischer and Thomas Häussler, the podcast features interviews with industry experts and discussions on many topics, including data analytics, customer experience, and market research software.

Fischer emphasizes the importance of staying on top of technological advancements in the field. "As market researchers, it's essential to keep up with the latest technologies and how they can be used to gather and analyze data," he says. "That's what 'Marktforschung 2.0' is all about - helping professionals stay up-to-date and succeed in an increasingly digital world."

5. Die Marktforscher

"Die Marktforscher" (or "The Market Researchers") is a podcast that offers a broad overview of the market research industry. Hosted by market research professionals Julia König and Michael Schmieder, the podcast covers a range of topics, including industry trends, best practices, and case studies.

In an interview with the hosts, König emphasized the importance of staying current in the field. "As market researchers, it's essential to stay on top of the latest trends and techniques in order to provide valuable insights to our clients," she says. "That's what 'Die Marktforscher' is all about - helping professionals stay up-to-date and succeed in their careers."

These are just a few of the many excellent German-language podcasts out there for market researchers and opinion miners. Whether you're looking to learn about the latest industry trends, improve your skills, or simply stay up-to-date, these podcasts are a great resource to add to your list.

Leveraging Information with Sentiment Analysis

What is sentiment analysis?

Sentiment analysis is a method for classifying a block of text or speech as positive, negative or neutral. But does it only focus on polarity? Not at all. With the help of algorithms, it also provides insights into expressed emotions such as joy and anger.

In software development, sentiment analysis is one of the three central pillars of deep data analysis, along with topic modelling and named entity recognition. Unfortunately, there is no generally accepted definition of the term.

Since the late 1970s, linguists and social scientists have been working on the analysis of tonalities in texts. More recently, the increasing development of neural networks has led to completely new fields of application and approaches to sentiment analysis.

Also known as "opinion mining" or "emotion AI", sentiment analysis can be used, among other things, for a better understanding of customer needs, analyses of reviews and decision-making processes, as well as for referral and recommendation programmes.

Tucan.ai is also using sentiment analysis to improve the conversation analysis capabilities of its software toolkit. "Currently, we are still in the standardised training phase. Soon, however, our users will be able to change the sentiments read from a paragraph or text part themselves. This will allow us to train the AI specifically with customer-specific data so that it can make increasingly precise predictions," says Mohammed Aymen Ben Slimen, who has been supporting the machine learning team of Tucan.ai since the beginning of this year.

"With the help of AI sentiment analysis we want to help customers better understand the emotions expressed in conversations. For example, if a speaker agrees with a topic, we show a happy emoji, and if someone complains, this is marked by a sad emoji," explains Aymen.

How does sentiment analysis work?

Sentiment analysis is a subfield of natural language processing, i.e. the algorithmic processing and evaluation of natural language in the form of text or speech data. Corresponding AI systems try to automatically capture the opinion of the sender. In essence, three central aspects are evaluated in the process:

- Content: What central topics are speakers talking about?

- Polarity: Which positive and negative opinions are expressed by whom?

- Opinion leadership: Who represents which opinion on which topic?

At its core, sentiment analysis is a straightforward process: a text is first broken down into components such as sentences, phrases or small word parts. Then the emotionally charged components are identified. These are then rated, for example with "-1" for negative and "+1" for positive. In a multi-layer sentiment analysis, these individual ratings are also combined into an overall rating.
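The three steps described above (splitting, rating, combining) can be sketched in a few lines of Python. This is a minimal illustration and not Tucan.ai's implementation: the tiny `LEXICON`, the sentence splitter and the averaging rule are all assumptions chosen for readability.

```python
import re

# Hypothetical toy lexicon: word -> polarity (+1 positive, -1 negative).
LEXICON = {"love": 1, "great": 1, "liked": 1, "terrible": -1, "bad": -1, "hate": -1}


def split_sentences(text: str) -> list:
    """Step 1: break a text into sentences at ., ! or ? followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [part for part in parts if part]


def score_sentence(sentence: str) -> int:
    """Step 2: rate a sentence by summing the lexicon scores of its known words."""
    words = re.findall(r"[a-z']+", sentence.lower())
    return sum(LEXICON.get(word, 0) for word in words)


def score_text(text: str) -> float:
    """Step 3: combine the sentence ratings into one value clipped to [-1, 1]."""
    sentences = split_sentences(text)
    if not sentences:
        return 0.0
    mean = sum(score_sentence(s) for s in sentences) / len(sentences)
    return max(-1.0, min(1.0, mean))
```

For instance, `score_text("I love this theatre. The service was great!")` evaluates to `1.0`, while a text without any known words scores `0.0`.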

Types of sentiment analysis

The most common type of sentiment analysis focuses on polarity, i.e. on the contrast between positive and negative, but other types can also identify specific feelings and emotions. The most common forms are:

- Standard sentiment analysis

- Emotion recognition

- Fine-grained sentiment analysis

- Multilingual sentiment analysis

- Aspect-based sentiment analysis (ABSA)

- Intent detection

Standard sentiment analysis

Standard sentiment analysis determines the polarity of an opinion and classifies it as positive, negative or neutral. Here is an example:

- Positive: "I really love this new theatre."

- Neutral: "I'm unsure. I liked the play, but I didn't enjoy the ambience."

- Negative: "The play was terrible and so was the overall vibe and service."

Emotion recognition

Emotion recognition makes it possible to detect the emotions underlying a text, such as joy, anger and frustration. This type of analysis is particularly challenging for lexicon-based approaches. A lexicon can define which words signal which emotions, but people often express emotions in very different ways. For example, words that usually have a negative connotation can also carry a positive meaning (e.g. "sick" used as slang for "excellent").

Multilingual sentiment analysis

Multilingual sentiment analysis is comparatively challenging because it involves classifying and processing texts in multiple languages. Alternatively, language classifiers can be used to detect the language of a text so that the sentiment analysis can be trained and adapted accordingly, e.g. for a preferred language.

Fine-grained sentiment analysis

This method, also called graded sentiment analysis, allows you to add a few more categories in addition to positive, negative or neutral ones. It is very similar to 5-star ratings and is divided into five segments: very positive, positive, neutral, negative and very negative.
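As a rough sketch of how a polarity score could be mapped onto the five segments named above, the function below assumes the score lies between -1 and 1. The function name and the cut-off values are illustrative assumptions, not calibrated thresholds from any particular product.

```python
def to_five_levels(score: float) -> str:
    """Map a polarity score in [-1, 1] to one of five graded labels."""
    if not -1.0 <= score <= 1.0:
        raise ValueError("score must lie between -1 and 1")
    # The cut-off values below are illustrative assumptions.
    if score <= -0.6:
        return "very negative"
    if score <= -0.2:
        return "negative"
    if score < 0.2:
        return "neutral"
    if score < 0.6:
        return "positive"
    return "very positive"
```

With these thresholds, `to_five_levels(0.9)` returns `"very positive"` and `to_five_levels(0.0)` returns `"neutral"`.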

Intent detection

As the name suggests, intent detection analyses a text to understand the intent behind a particular opinion. It identifies valuable customer opinions that can help to solve a problem or improve a product or service. Intent detection can also predict whether a customer intends to use a product by observing their behaviour and deriving patterns from it, which is useful for advertising and marketing.

Aspect-based sentiment analysis

Aspect-based sentiment analysis focuses on identifying the features or aspects of an entity that an opinion refers to, for example in product reviews. Reviews are often composed of multiple opinions about product features such as user interface, price, mobile versions or integrations, to name a few. In other words, it is a more granular approach to analysing reviews.
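To make the idea of one review carrying several opinions more concrete, here is a deliberately simple keyword-based sketch. The `ASPECTS` and `OPINIONS` word lists are hypothetical; production systems typically learn aspects and opinion words from data rather than from fixed lists.

```python
import re

# Hypothetical keyword lists for illustration only.
ASPECTS = {
    "price": {"price", "expensive", "cheap"},
    "interface": {"interface", "ui", "design"},
}
OPINIONS = {"great": 1, "clean": 1, "love": 1, "expensive": -1, "confusing": -1, "slow": -1}


def aspect_sentiment(review: str) -> dict:
    """Return a polarity score for each aspect mentioned in the review."""
    results = {}
    # Score each clause separately so opposing opinions do not cancel out.
    for clause in re.split(r"[.,;!?]|\bbut\b", review.lower()):
        words = set(re.findall(r"[a-z]+", clause))
        score = sum(value for word, value in OPINIONS.items() if word in words)
        for aspect, keywords in ASPECTS.items():
            if words & keywords:
                results[aspect] = results.get(aspect, 0) + score
    return results
```

Calling `aspect_sentiment("The interface is clean, but the price is too expensive.")` yields `{"interface": 1, "price": -1}`, whereas a single overall score for the same review would hide the disagreement between the two aspects.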

Sentiment analysis techniques

Over time, a variety of techniques and technologies have evolved around sentiment analysis. In particular, recent advances in machine learning and neural networks have led to rapid improvements in NLP in general and sentiment analysis in particular.

In the case of Tucan.ai, the first step is a deep-learning based model that can predict the sentiment of a particular paragraph or part of a text (positive, negative or neutral). "After we create the transcript," says Aymen, "we pass it to an NLP sentiment analysis model that predicts sentiments and displays emojis related to the result."

Rule-based sentiment analysis

In this simpler approach to text analysis, no training or ML models are used. Instead, the software classifies parts of a text based on hand-crafted linguistic rules. These rules usually rely on lexicons, i.e. lists of words annotated with their polarity, which is why the rule-based approach is also called the lexicon-based approach. Widely used rule-based tools and resources include TextBlob, VADER and SentiWordNet.
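A rule-based scorer can be sketched without any training step. The example below combines a small lexicon with two hand-written rules, one for intensifiers and one for negations. The lexicon entries and both rules are hypothetical and far simpler than what tools such as VADER ship with.

```python
# Hypothetical lexicon and rule sets for illustration only.
LEXICON = {"good": 1.0, "happy": 1.0, "bad": -1.0, "terrible": -1.0}
NEGATIONS = {"not", "never", "no"}
INTENSIFIERS = {"very": 1.5, "really": 1.5}


def rule_based_score(sentence: str) -> float:
    """Score a sentence with a lexicon plus intensifier and negation rules."""
    tokens = sentence.lower().replace(".", " ").replace(",", " ").split()
    total = 0.0
    for i, token in enumerate(tokens):
        if token not in LEXICON:
            continue
        value = LEXICON[token]
        previous = tokens[i - 1] if i > 0 else ""
        before_previous = tokens[i - 2] if i > 1 else ""
        # Rule 1: an intensifier directly before the word strengthens it.
        if previous in INTENSIFIERS:
            value *= INTENSIFIERS[previous]
            previous = before_previous
        # Rule 2: a negation before the (possibly intensified) word flips it.
        if previous in NEGATIONS:
            value = -value
        total += value
    return total
```

Here `rule_based_score("The service was not good.")` gives `-1.0`, because the negation rule flips the positive lexicon entry for "good".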

Machine learning

In contrast to rule-based systems, a machine learning algorithm is not given any rules; the system learns them itself. This requires a training data set in which each input (sentence, paragraph, text) is assigned a tag (negative, positive, neutral). For the algorithm to work, the text is converted into a numerical representation. This representation is usually a vector that holds information about the text, e.g. the frequency of each term in a bag-of-words approach. Recently, "word embeddings", which store semantic information about words in vectors, have become very popular.
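The conversion of tagged text into term-frequency vectors can be illustrated as follows. This is a minimal bag-of-words sketch using only the standard library; the two training examples and the helper names are made up for the illustration, and a real pipeline would feed such vectors into a classifier.

```python
from collections import Counter


def build_vocabulary(texts: list) -> list:
    """Collect every distinct word in the texts, sorted for a stable order."""
    return sorted({word for text in texts for word in text.lower().split()})


def bag_of_words(text: str, vocabulary: list) -> list:
    """Return the term frequencies of `text`, aligned with the vocabulary."""
    counts = Counter(text.lower().split())
    return [counts[word] for word in vocabulary]


# Hypothetical labelled training examples: (input text, tag).
training = [("good good service", "positive"), ("bad service", "negative")]

vocabulary = build_vocabulary([text for text, _ in training])
vectors = [bag_of_words(text, vocabulary) for text, _ in training]
```

After running this, `vocabulary` is `["bad", "good", "service"]` and `vectors` is `[[0, 2, 1], [1, 0, 1]]`: each position counts how often the corresponding vocabulary word occurs in the text.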

Deep learning

Deep learning allows data to be processed in a much more complex way. An LSTM (Long Short-Term Memory) model is a type of recurrent neural network (RNN) used to process sequential data. Since we assume that the order of features (words) in a sentence is important, we use this neural network architecture. Deep learning is computationally intensive and is not well suited to high-dimensional sparse vectors, which lead to poor performance and slow convergence.

When we extract features from the original text for model training, we therefore need to represent them as dense vectors. As a first step, each text is converted into a sequence of numbers, where each number is the index of a word in the vocabulary. Taking this further, words with similar usage or meaning need to be mapped to similar real-valued vectors (rather than to an index), which is what the embeddings mentioned above provide. Without them, the model would misinterpret the index number of a word as its meaning. With "word embeddings", all words are embedded in a multi-dimensional vector space so that their similarity can be measured by distance.
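The difference between word indices and embeddings can be shown with a toy example. The vocabulary index and the two-dimensional vectors below are invented for illustration (trained embeddings have many more dimensions), and cosine similarity is used here as one common way to compare vectors.

```python
import math

# Hypothetical vocabulary index; 0 is reserved for unknown words.
WORD_INDEX = {"good": 1, "great": 2, "terrible": 3}

# Hypothetical 2-dimensional embeddings, keyed by word index.
EMBEDDINGS = {1: [0.9, 0.1], 2: [0.8, 0.2], 3: [-0.9, 0.1]}


def encode(text: str) -> list:
    """Convert a text into a sequence of vocabulary indices."""
    return [WORD_INDEX.get(word, 0) for word in text.lower().split()]


def cosine_similarity(a: list, b: list) -> float:
    """Cosine of the angle between two vectors; higher means more similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm
```

The indices 2 ("great") and 3 ("terrible") are numerically adjacent although the words mean the opposite. In the toy embedding space, by contrast, `cosine_similarity(EMBEDDINGS[1], EMBEDDINGS[2])` is close to 1 for "good" and "great", while the similarity between "good" and "terrible" is negative.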

Application of sentiment analysis

About 80 per cent of all the data that can be collected in a sentiment analysis - whether by human or computer - is unstructured and eludes classical approaches to analysis. The targeted use of this data can mean an immense competitive advantage for companies or organisations. However, the volume of data usually exceeds what humans can analyse manually. The ability to process huge amounts of data in a short time is a powerful argument for using automated systems for sentiment analysis.

Using machine learning, sentiment analysis transforms unstructured data collected from transcripts, chatbots, social media, surveys, etc. into meaningful information. It is a powerful AI resource that can be used to achieve lasting improvements in decision-making, as the following example use cases show:

Increase meeting efficiency

Each meeting topic can be analysed through sentiment analysis based on what each participant says to document how they feel about it emotionally. This makes it easier to avoid misunderstandings, duplications, repetitions and ambiguities.

Coaching - Sentiment analysis in sales talks

Sentiment analysis also helps in the documentation and evaluation of sales calls as well as in the coaching of call agents and consultants. In the operational area, it is often used as a performance measurement tool to evaluate the empathy or emotional intelligence of sales staff in their interactions with customers. Sentiment analysis also plays an important role in coaching salespeople to improve their conversational skills.

Lead scoring in sentiment analysis

Sentiment analysis can also measure the interest of potential customers through lead scoring. Scores are presented on a scale from -1 to 1, with the lower end indicating negative responses and the upper end indicating positive responses.
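One simple way to arrive at such a score is to average the sentiment of a lead's individual statements. The sketch below assumes that each statement has already been rated between -1 and 1. The function name and the choice of a plain average are assumptions for illustration, not a description of how any specific product computes lead scores.

```python
def lead_score(utterance_scores: list) -> float:
    """Average per-utterance sentiment scores into one lead score in [-1, 1]."""
    if not utterance_scores:
        return 0.0  # assumption: no statements are treated as neutral
    if any(not -1.0 <= score <= 1.0 for score in utterance_scores):
        raise ValueError("each score must lie between -1 and 1")
    return sum(utterance_scores) / len(utterance_scores)
```

A lead whose statements were rated `[1.0, 0.5, -0.5]` would receive a mildly positive score of about 0.33.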

Brand monitoring

Customer feedback and online conversations are important aspects of brand monitoring. Apart from social media, conversations can also take place in news, on websites, on review blogs or in forums. Listening to customers' voices using text analytics and sentiment extraction can help to better understand their attitudes towards a product or service.

Social media monitoring

Did you know that around 500,000 tweets go online every minute, many of which can contain valuable insights about brands, products and services? Twitter sentiment analysis allows companies to extract the emotions underlying conversations on social media. This helps to understand how people talk about a topic and why.

Customer care

Monitoring brands and social media gives us great insights into customer sentiment. But did you know that sentiment analysis of phone calls can be used for targeted marketing, sales and customer success? This is useful and common in call centres or customer care teams, for example. After all, as we all know, good customer service usually means higher customer retention.

Market research through Twitter sentiment analysis

By analysing the sentiment of tweets related to your product, you can systematically find out what people think about it. Do they find it useful? Does it actually fulfil its purpose? Perhaps they are dissatisfied with the price or would like a new feature.

Such insights can help companies transform their business and take strategic action, for example to:

- attract new customers;

- become more competitive;

- reduce customer service workload;

- make the brand more profitable;

- sell more services;

- retain existing clients;

- and improve marketing campaigns.

Want to test Tucan.ai for your company?

Book a free consultation call!